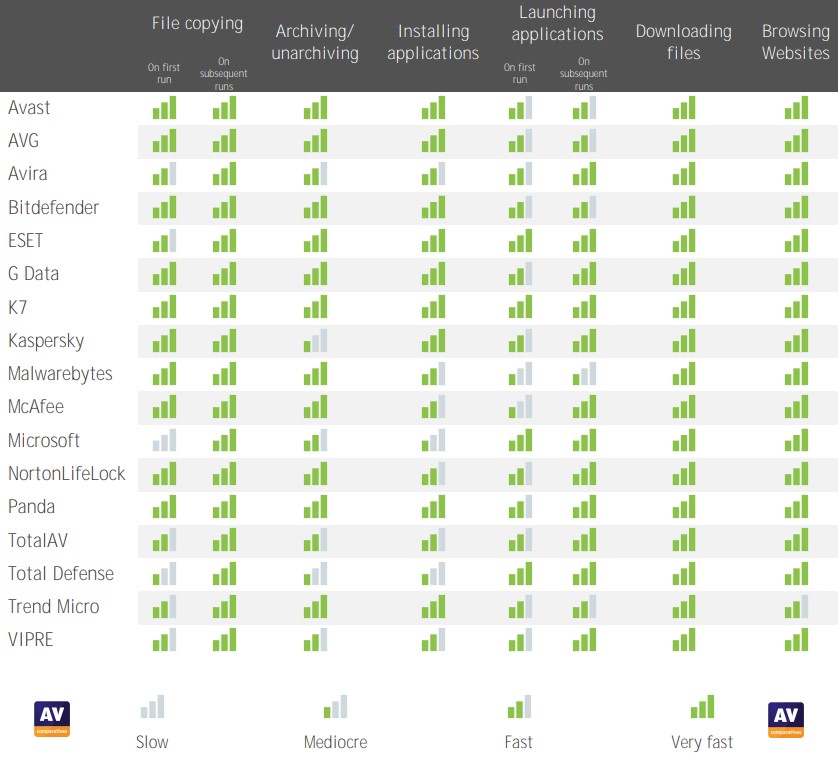

We have done it again. The AV-Comparatives performance tests confirm Panda Free Antivirus as the top-performing program in its category.

As usual, the Austrian laboratory has analysed the 18 most relevant cybersecurity solutions on the market in its performance test. This test measures the impact that different cybersecurity software has on the PC. To stay as close as possible to a typical user's setup, the test used a low-end PC running Windows 10 with an i3 processor, 4GB of RAM and a solid-state drive.

The results, with Panda Free Antivirus in the lead, are as good as they are unsurprising: this is not the first time that our reliable free antivirus has proven to be the best performer.

These results make us proud and encourage us to continue researching and developing our technology to bring you the latest in online security. We are also happy to be helping to dispel the belief that antivirus software slows down computers. As Panda Free has once again proven, performance and security are perfectly compatible. Even better: you can have both for free.

What AV-Comparatives’ Performance Tests measure

- File copying

Files are copied from one physical hard drive to another. Antivirus software checks these files, but it also uses their fingerprints to skip files that have already been scanned.

- Archiving and unarchiving

This is of particular interest to those who use their PC for work, as archiving work files is often slowed down when an antivirus scans their contents.

- Application installation

This test uses commonly used programs and measures the time it takes to install them.

- Application start-up

This test mainly involves common applications that users work with on their PCs. New Office documents (Word, Excel, PowerPoint) as well as PDFs in Adobe Acrobat Reader are opened and created. Reopening these documents is also part of the test, as users generally perform these operations repeatedly.

- File downloads

This test downloads commonly downloaded files hosted on an internet server.

- Browsing websites

This test measures the time it takes to open, fully load and view top websites using the Google Chrome browser.

- PC Mark

Lastly, PC Mark, a benchmark that measures overall PC performance, is run.
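The file-copying test mentions that antivirus software can use a fingerprint to skip files it has already scanned. The idea can be illustrated with a minimal Python sketch. It is not Panda's or AV-Comparatives' implementation: the SHA-256 content hash used as the fingerprint, the `ScanCache` class and the `scanner` callback are all assumptions made purely for illustration.

```python
import hashlib
from pathlib import Path


def fingerprint(path: Path) -> str:
    """Return a SHA-256 digest of the file contents (assumed fingerprint)."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        # Read in chunks so large files do not need to fit in memory.
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


class ScanCache:
    """Hypothetical cache that skips files whose fingerprint is known clean."""

    def __init__(self, scanner):
        self._scanner = scanner      # callable: Path -> bool (True = clean)
        self._clean = set()          # fingerprints already scanned as clean
        self.scans_performed = 0     # number of full scans actually run

    def check(self, path: Path) -> bool:
        key = fingerprint(path)
        if key in self._clean:
            return True              # seen before: no full scan needed
        self.scans_performed += 1
        is_clean = self._scanner(path)
        if is_clean:
            self._clean.add(key)
        return is_clean
```

Checking the same unchanged file twice triggers only one full scan in this sketch, while changing its contents produces a new fingerprint and a new scan. That is the kind of saving that matters most when the same files are copied repeatedly.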

At Panda Security, we appreciate the trust our customers place in us every day. We continue our efforts to develop the best technology and to protect you with the best cybersecurity solution on the market.